Infrastructure drift, the divergence between the desired state defined in your Infrastructure as Code (IaC) and the actual state of your cloud environment, is a common challenge in modern cloud operations. While it might seem straightforward to simply lock down all manual changes, the reality of cloud operations often demands a more nuanced approach.

Why Drift Occurs

Infrastructure drift typically happens when:

- Engineers need to quickly test or validate configurations directly in the cloud

- Emergency fixes are applied manually during incidents

- Third-party tools or services modify resources

- Team members make "temporary" changes that become permanent

Control vs. Flexibility

There are two common approaches to handling infrastructure changes:

- Strict Control: Blocking all manual changes and requiring everything to go through IaC pipelines

  - Pros: Consistent, traceable, and version-controlled changes

  - Cons: Slower development cycles, reduced flexibility for testing and emergencies

- Full Freedom: Allowing direct modifications to infrastructure

  - Pros: Rapid testing and development, quick emergency responses

  - Cons: Configuration drift, undocumented changes, potential compliance issues

Neither extreme is ideal for most organizations. The solution lies in finding a middle ground that balances control with flexibility. A common pattern is to use both modes for different environments: production environments should usually follow strict control, while development environments can be more open to manual changes.

Another Middle Ground: Drift Detection

I recently implemented a solution for a customer that strikes a good balance between control and freedom: drift detection. Instead of preventing manual changes, you regularly validate that the infrastructure still matches the code and handle any differences (drifts) in a timely manner.

The biggest problem with infrastructure drift is the delay between a change and the moment someone discovers it. By that time, nobody remembers why the change was made in the first place. Detecting drift early is therefore a simple way to minimize the impact of manual changes.

With Terraform, this is straightforward. Depending on your setup, it boils down to these steps:

- Schedule a pipeline

- Run `terraform plan -detailed-exit-code` on all environments

- Process the results

- Notify someone or review the results manually
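The steps above can also be driven from a single script instead of a pipeline step per environment. The following Python sketch assumes Terraform is on the PATH and that each environment is a separate, already initialised Terraform working directory (that layout is an assumption). It runs the same plan/show commands as the pipeline step shown in the next section, maps the `-detailed-exit-code` result (0 = no changes, 1 = error, 2 = changes present), and extracts the summary lines roughly the way the `grep` in that step does. The function names are hypothetical.

```python
import subprocess
from pathlib import Path

# Exit codes of `terraform plan -detailed-exit-code`.
EXIT_CODE_MEANING = {0: "no drift", 1: "error", 2: "drift"}


def classify(exit_code: int) -> str:
    """Map a terraform plan exit code to a drift status; unknown codes count as errors."""
    return EXIT_CODE_MEANING.get(exit_code, "error")


def extract_summary(plan_text: str) -> str:
    """Keep lines containing 'Plan:' or 'No changes', similar to the grep in the pipeline."""
    matches = [line for line in plan_text.splitlines()
               if "Plan:" in line or "No changes" in line]
    return "\n".join(matches) if matches else "Error retrieving summary"


def check_environment(env_dir: Path) -> dict:
    """Run a drift check in one Terraform working directory (assumed to be initialised)."""
    plan = subprocess.run(
        ["terraform", "plan", "-input=false", "-detailed-exit-code", "-no-color", "-out=tfplan"],
        cwd=env_dir, capture_output=True, text=True,
    )
    summary = "Error retrieving summary"
    if plan.returncode in (0, 2):  # a plan file is only written when planning succeeded
        show = subprocess.run(
            ["terraform", "show", "-no-color", "tfplan"],
            cwd=env_dir, capture_output=True, text=True,
        )
        summary = extract_summary(show.stdout)
    # status stays a string to match the "0"/"2" comparisons in the JUnit script
    return {"name": env_dir.name, "status": str(plan.returncode), "summary": summary}
```

Calling `check_environment` for each environment directory yields one record per environment with `name`, `status` and `summary` keys, the same keys the JUnit script later in this post reads.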

Example Implementation

An example implementation could look like this:

```yaml
script: |
  # -detailed-exit-code: 0 = no changes, 1 = error, 2 = changes (drift) present
  STATUS=0
  terraform plan -input=false -detailed-exit-code -no-color -out tfplan || STATUS=$?
  terraform show -no-color tfplan > tfplan.txt
  SUMMARY=$(grep -E "Plan:|No changes" tfplan.txt || echo "Error retrieving summary")
```

Now you can process $STATUS and $SUMMARY as you like. One great option is Azure DevOps' built-in test result support. Test results are not intended for this purpose, but they provide a great way to visualize the drift detection results directly in Azure DevOps.
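If several environments are checked in the same job, one way to hand $STATUS and $SUMMARY over to the reporting step is to collect them as records in a small JSON file. This sketch assumes the shell step exports them (for example with `export STATUS SUMMARY`) together with a hypothetical `ENV_NAME` variable; the file name and record layout are assumptions, chosen to match the `name`, `status` and `summary` keys the JUnit script reads.

```python
import json
import os
from pathlib import Path


def append_result(results_file: Path, name: str, status: str, summary: str) -> None:
    """Append one environment's drift result to a JSON list stored in results_file."""
    results = json.loads(results_file.read_text()) if results_file.exists() else []
    results.append({"name": name, "status": status, "summary": summary})
    results_file.write_text(json.dumps(results, indent=2))


def append_from_env(results_file: Path) -> None:
    """Read the (assumed exported) ENV_NAME, STATUS and SUMMARY variables and store them."""
    append_result(
        results_file,
        name=os.environ["ENV_NAME"],  # hypothetical variable naming the environment
        status=os.environ["STATUS"],  # kept as a string, e.g. "0" or "2"
        summary=os.environ.get("SUMMARY", "Error retrieving summary"),
    )
```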

I used a simple Python script to create a JUnit-compatible XML file from the results:

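```python
import xml.etree.ElementTree as ET

...

testcase = ET.SubElement(
    testsuite,
    "testcase",
    {"classname": "Infrastructure.Drift", "name": result["name"]},
)

# result["status"] holds the terraform plan exit code as a string
if result["status"] == "0":
    # <success> is not part of the JUnit schema; a testcase without a <failure>
    # or <error> child is reported as passed either way
    success = ET.SubElement(testcase, "success", {"message": result["summary"]})
    success.text = f"No drift detected in {result['name']}"
elif result["status"] == "2":
    # Drift: reported as a failed test, with the plan summary as the message
    failure = ET.SubElement(testcase, "failure", {"message": result["summary"]})
    failure.text = f"Drift detected in {result['name']}"
```

Afterwards, upload the generated file to Azure DevOps: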

```yaml
- task: PublishTestResults@2
  inputs:
    testResultsFormat: 'JUnit'
    testResultsFiles: '$(Pipeline.Workspace)/test-results.xml'
    testRunTitle: 'Drift Detection Results'
  displayName: 'Publish Test Results'
```

Result

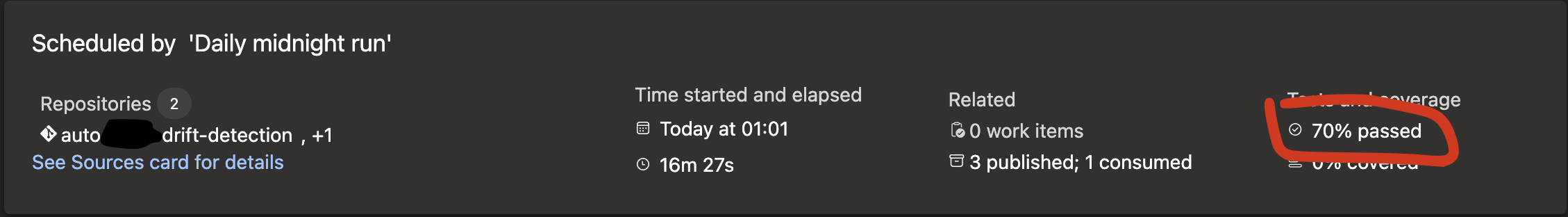

The pipeline results show the percentage of environments with drift.

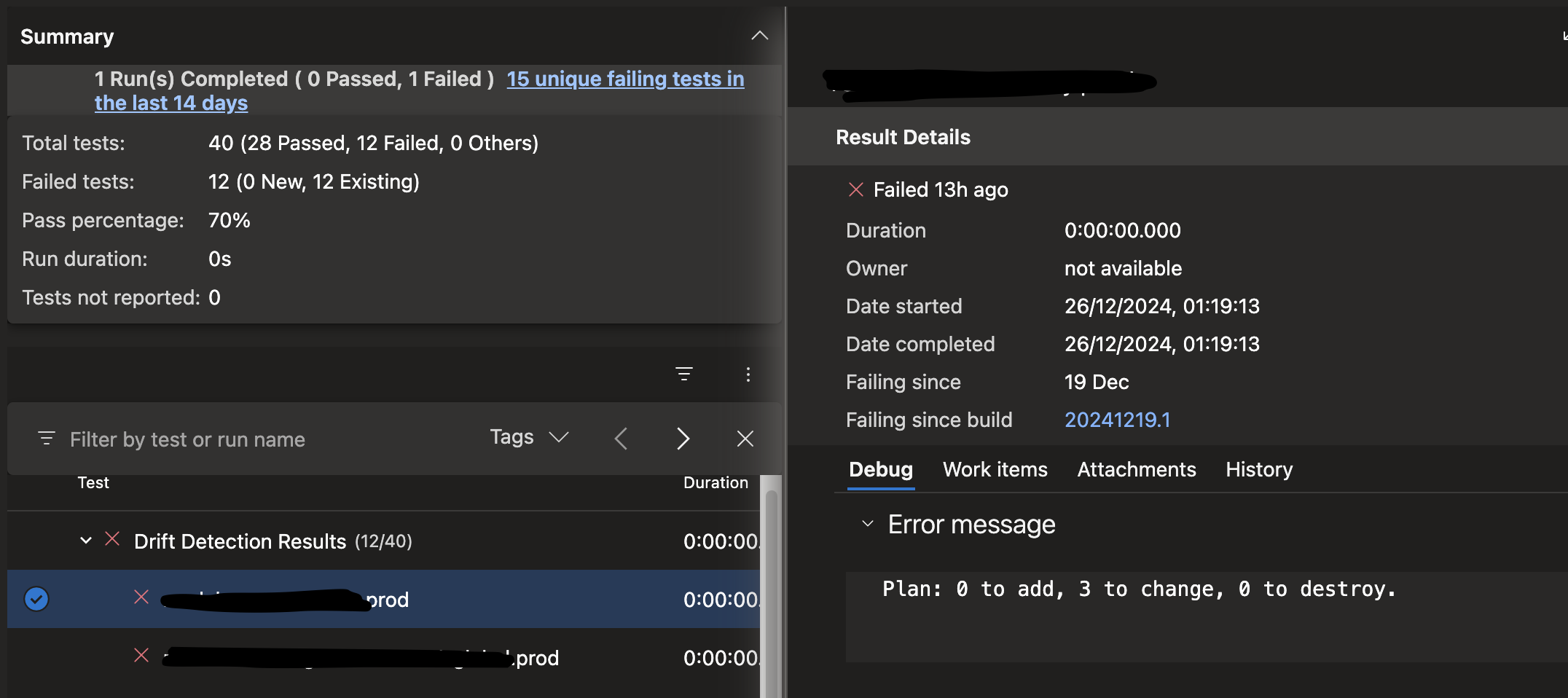

The details view shows the plan summary as the error message.

Depending on your process, you can now act on these results: create tickets, review them manually when required, or notify the team automatically. In our case, the pipeline was set up to fail if drift is detected and to send a notification using the Azure DevOps built-in notification system.
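Failing on drift can be combined with generating the report. The following is a self-contained sketch, not the exact script used in the project, that builds the complete JUnit file from a list of result records (the `name`/`status`/`summary` layout used above), reports exit code 1 from `terraform plan` as a JUnit error rather than as drift, and returns a non-zero exit code whenever anything other than "no changes" was found. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET


def build_junit(results: list[dict]) -> ET.Element:
    """Build a JUnit <testsuite> with one <testcase> per environment."""
    drifted = sum(r["status"] == "2" for r in results)
    errored = sum(r["status"] not in ("0", "2") for r in results)
    suite = ET.Element("testsuite", {
        "name": "Infrastructure Drift",
        "tests": str(len(results)),
        "failures": str(drifted),
        "errors": str(errored),
    })
    for r in results:
        case = ET.SubElement(suite, "testcase",
                             {"classname": "Infrastructure.Drift", "name": r["name"]})
        if r["status"] == "2":
            # Drift: a failed test whose message is the plan summary.
            failure = ET.SubElement(case, "failure", {"message": r["summary"]})
            failure.text = f"Drift detected in {r['name']}"
        elif r["status"] != "0":
            # Exit code 1 (or anything unexpected): the plan itself failed.
            error = ET.SubElement(case, "error", {"message": r["summary"]})
            error.text = f"terraform plan failed in {r['name']}"
        # Status "0": a testcase without child elements counts as passed.
    return suite


def write_junit(results: list[dict], path: str) -> int:
    """Write the XML file and return 1 if any environment drifted or errored, else 0."""
    ET.ElementTree(build_junit(results)).write(path, encoding="utf-8", xml_declaration=True)
    return 1 if any(r["status"] != "0" for r in results) else 0
```

Ending the script with `sys.exit(write_junit(results, "test-results.xml"))` fails the step; the `PublishTestResults@2` step then needs a condition such as `succeededOrFailed()` so the report is still uploaded. Alternatively, the task's `failTaskOnFailedTests` input can fail the pipeline based on the published results instead.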